API Gateway vs Load Balancer in Microservices Architecture

Difference between API Gateway and Load Balancer in Microservices?

Hello folks, the difference between an API Gateway and a Load Balancer is one of the popular microservices interview questions, and it is often asked of experienced Java developers during telephonic or face-to-face rounds.

However, you must know what an API Gateway and a load balancer are before you can talk about their differences. If you are new to these two concepts, I suggest you first watch this video on API Gateway from ByteByteGo to learn the basics.

And watch this one from Exponent, another great site and channel for learning system design concepts, to learn about load balancers.

In the past, I have shared essential Microservices design principles and best practices as well as popular Microservices design patterns. In this article, I will answer this question and also tell you the key differences between them.

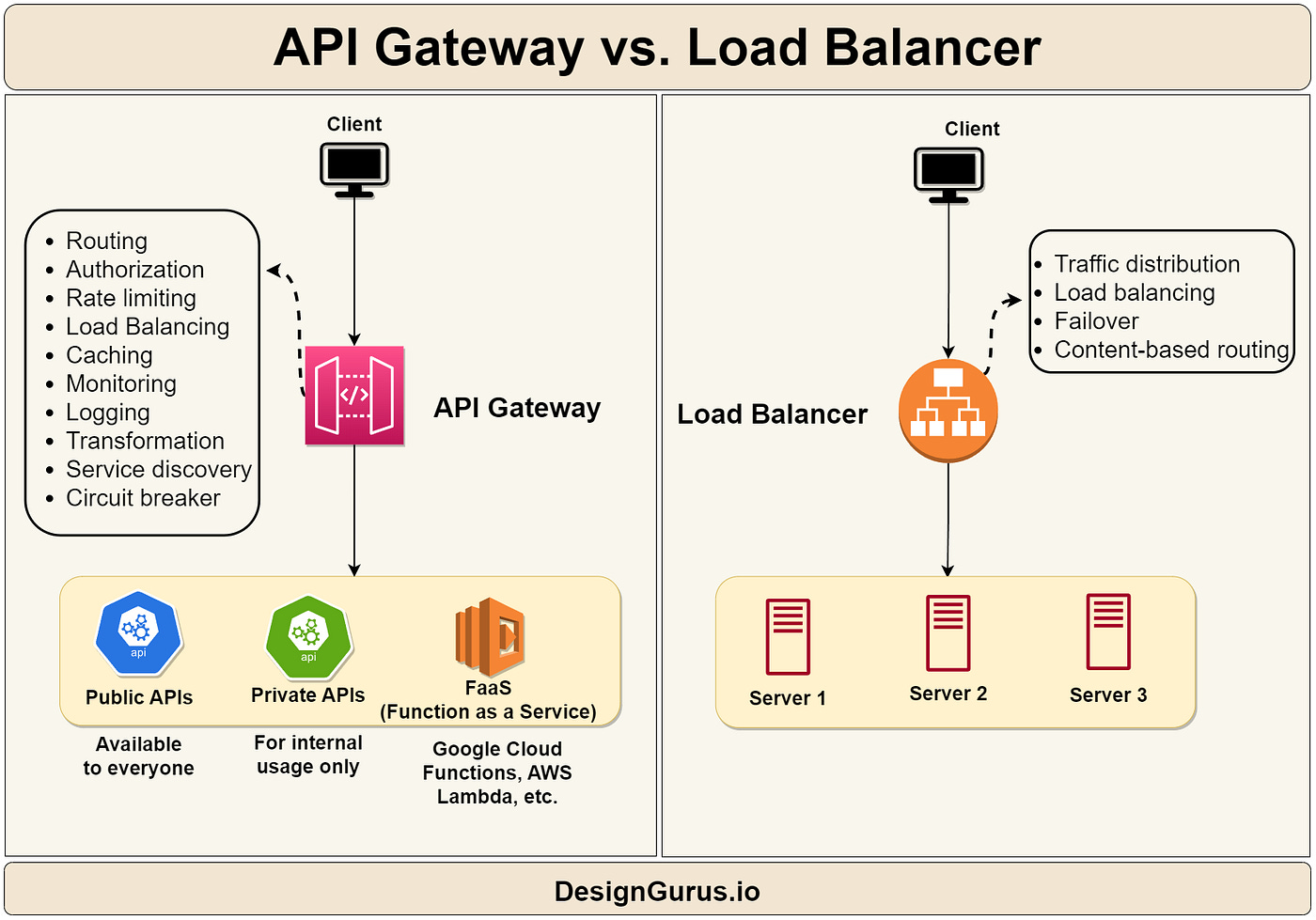

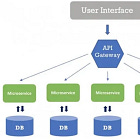

While API Gateway and Load Balancer are both important components in microservices architecture, they serve different purposes.

API Gateway acts as a single entry point for all API requests and provides features such as request routing, rate limiting, authentication, and API versioning, and also hides the complexities of the underlying microservices from the client applications.

Load Balancer, on the other hand, is responsible for distributing incoming requests across multiple instances of a microservice to improve availability, performance, and scalability. It helps to evenly distribute the workload so that no single instance becomes a bottleneck while others sit idle.

In other words, API Gateway provides higher-level features related to API management, while Load Balancer provides lower-level features related to traffic distribution across multiple instances of a microservice.

Now that you know the basics, let’s see each of them in a bit more detail to understand the differences as well as learn when to use them.

By the way, if you are preparing for System design interviews and want to learn System Design in depth, then you can also check out sites like ByteByteGo, DesignGuru, Exponent, Educative, bugfeee.ai, and Udemy, which have many great System design courses.

What is the API Gateway in Microservices?

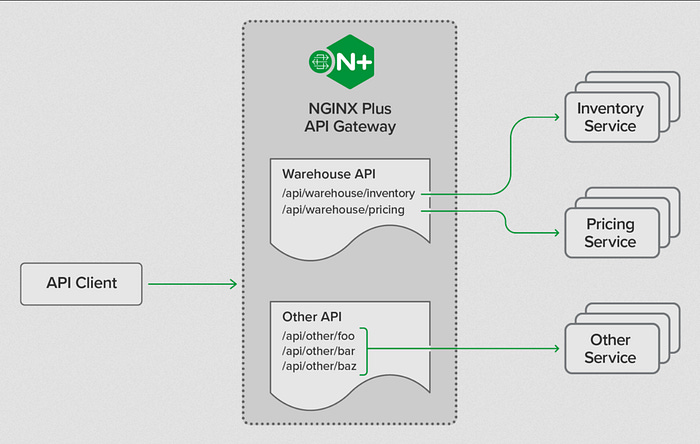

API Gateway is one of the essential patterns used in microservices architecture that acts as a reverse proxy to route requests from clients to multiple internal services.

It also provides a single entry point for all clients to interact with the system, allowing for better scalability, security, and control over the APIs.

API Gateway handles common tasks such as authentication, rate limiting, and caching, while also abstracting away the complexity of the underlying services.
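As a rough illustration of the rate limiting mentioned above, here is a minimal fixed-window sketch in Java. The class name `FixedWindowRateLimiter`, the per-client key, and the caller-supplied timestamp are assumptions made for this example. Real gateways usually expose rate limiting as configuration and may use other algorithms, such as token bucket.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical fixed-window rate limiter: each client may make at most
// maxRequests calls per window of windowMillis milliseconds.
class FixedWindowRateLimiter {
    private final int maxRequests;
    private final long windowMillis;
    // clientId -> {windowStartMillis, requestCountInWindow}
    private final Map<String, long[]> windows = new HashMap<>();

    FixedWindowRateLimiter(int maxRequests, long windowMillis) {
        this.maxRequests = maxRequests;
        this.windowMillis = windowMillis;
    }

    // The current time is passed in so the behaviour is deterministic in tests.
    synchronized boolean allow(String clientId, long nowMillis) {
        long[] window = windows.get(clientId);
        if (window == null || nowMillis - window[0] >= windowMillis) {
            // First request from this client, or the previous window has expired.
            window = new long[] {nowMillis, 0};
            windows.put(clientId, window);
        }
        if (window[1] >= maxRequests) {
            return false; // over the limit; a gateway would typically answer HTTP 429
        }
        window[1]++;
        return true;
    }
}
```

With a limit of 2 requests per second, the third call from the same client inside one window is rejected, while a different client is unaffected.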

Things will be clearer when we go through a real-world scenario where API Gateway can be used, so let’s do that.

Let’s assume you have a microservices-based e-commerce application that allows users to browse and purchase products. The application is composed of several microservices, including a product catalog service, a shopping cart service, an order service, and a user service.

To simplify the interaction between clients and these microservices, you can use an API gateway. The API gateway acts as a single entry point for all client requests and routes them to the appropriate microservice.

For example, when a user wants to view the product catalog, they make a request to the API gateway, which forwards the request to the product catalog service. Similarly, when a user wants to place an order, they make a request to the API gateway, which forwards the request to the order service.

By using an API gateway, you can simplify the client-side code, reduce the number of requests that need to be made, and provide a unified interface for clients to interact with the microservices.
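The routing in this e-commerce scenario can be sketched as a small route table. This is only an illustration: the class `GatewayRouter`, the path prefixes, and the service names are assumptions made for this example, not the API of any particular gateway product.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical route table: path prefix -> name of the backend microservice.
class GatewayRouter {
    private final Map<String, String> routes = new LinkedHashMap<>();

    GatewayRouter addRoute(String pathPrefix, String serviceName) {
        routes.put(pathPrefix, serviceName);
        return this;
    }

    // Resolves a request path (without query string) to a service name,
    // preferring the longest matching prefix. Empty if nothing matches.
    Optional<String> resolve(String requestPath) {
        String best = null;
        for (String prefix : routes.keySet()) {
            boolean matches = requestPath.equals(prefix)
                    || requestPath.startsWith(prefix + "/");
            if (matches && (best == null || prefix.length() > best.length())) {
                best = prefix;
            }
        }
        return Optional.ofNullable(best).map(routes::get);
    }

    // Illustrative routes for the four services named in the scenario.
    static GatewayRouter ecommerceDefaults() {
        return new GatewayRouter()
                .addRoute("/products", "product-catalog-service")
                .addRoute("/cart", "shopping-cart-service")
                .addRoute("/orders", "order-service")
                .addRoute("/users", "user-service");
    }
}
```

Here `GatewayRouter.ecommerceDefaults().resolve("/products/42")` resolves to the product catalog service, while an unknown path such as `/payments/1` resolves to nothing, which a gateway would normally turn into a 404 response.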

When to use API Gateway?

Now that we know what an API Gateway is and what services it provides, it's pretty easy to understand when to use it. API Gateway can be used in various scenarios, such as:

Providing a unified entry point for multiple microservices: API Gateway can act as a single entry point for multiple microservices, providing a unified interface to clients.

Implementing security: API Gateway can enforce security policies, such as authentication and authorization, for all the microservices.

Improving performance: API Gateway can cache responses from microservices and reduce the number of requests that reach the back-end.

Simplifying the client: API Gateway can abstract the complexity of the underlying microservices and provide a simpler interface for clients to interact with.

Versioning and routing: API Gateway can route requests to different versions of the same microservice or different microservices based on the request parameters.

So, you can see that it’s doing a lot more than just forwarding your request to Microservices, and that’s probably the main difference between API Gateway and Load balancer.
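To make the "Improving performance" point concrete, here is a minimal sketch of a response cache with a time-to-live. The class `ResponseCache`, the string-based keys and bodies, and the caller-supplied clock are assumptions for illustration. Production gateways also deal with cache invalidation, size limits, and HTTP cache headers, which this sketch ignores.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical TTL cache a gateway could place in front of a microservice.
class ResponseCache {
    private static final class Entry {
        final String body;
        final long expiresAtMillis;

        Entry(String body, long expiresAtMillis) {
            this.body = body;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final Map<String, Entry> entries = new HashMap<>();
    private final long ttlMillis;

    ResponseCache(long ttlMillis) {
        this.ttlMillis = ttlMillis;
    }

    // Returns the cached body while it is fresh; otherwise calls the backend
    // once and stores the result for ttlMillis milliseconds.
    synchronized String get(String key, long nowMillis, Function<String, String> backend) {
        Entry cached = entries.get(key);
        if (cached != null && nowMillis < cached.expiresAtMillis) {
            return cached.body; // cache hit: the backend is not called
        }
        String body = backend.apply(key);
        entries.put(key, new Entry(body, nowMillis + ttlMillis));
        return body;
    }
}
```

Repeated requests for the same key within the TTL are served from memory, so only the first one reaches the back-end.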

What is a Load Balancer? What Problem Does It Solve?

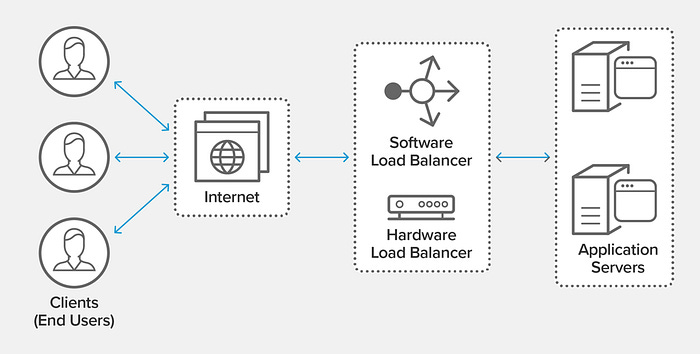

A load balancer is a component that distributes incoming network traffic across multiple servers or nodes in a server cluster, not just in a Microservices architecture but any architecture.

It helps to improve the performance, scalability, and availability of applications and services by evenly distributing the workload among the servers.

In this way, a load balancer ensures that no single server is overloaded with traffic while others remain idle, which can lead to better resource utilization and increased reliability of the overall system.
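The simplest way to spread requests evenly is round-robin selection. The sketch below assumes a fixed list of server addresses and a hypothetical class name `RoundRobinBalancer`. It only picks the next server and does not forward any traffic.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical round-robin selector: hands out servers in a repeating cycle.
class RoundRobinBalancer {
    private final List<String> servers;
    private final AtomicInteger counter = new AtomicInteger();

    RoundRobinBalancer(List<String> servers) {
        if (servers.isEmpty()) {
            throw new IllegalArgumentException("at least one server is required");
        }
        this.servers = new ArrayList<>(servers); // defensive copy
    }

    // Thread-safe: each call takes the next position in the cycle.
    // floorMod keeps the index valid even if the counter overflows.
    String next() {
        int index = Math.floorMod(counter.getAndIncrement(), servers.size());
        return servers.get(index);
    }
}
```

With three servers, four consecutive calls to `next()` return the first, second, and third server and then the first again, so each server receives the same share of requests.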

Here is a nice diagram which shows how a load-balancer works and how it can be used at both the hardware and software levels as well:

When to use a load balancer in Microservices?

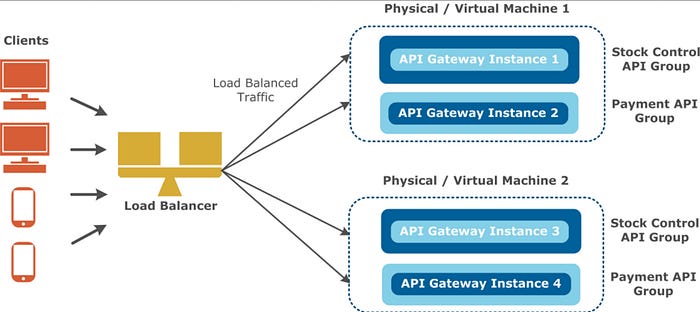

As I said, Load balancers are primarily used in microservices architecture to distribute incoming network traffic across multiple instances of the same service.

Load balancers can help to increase the availability and scalability of the application, and that’s why they have been around for quite some time, even before the Microservices architecture.

Load balancers are also used when the traffic to the application becomes too high, and a single instance of the service is unable to handle the load. Load balancers are also used when the application is deployed across multiple servers or data centers.

Pros and Cons of Load Balancer in Microservices

Now, let’s see the pros and cons of using Load balancers in a microservices architecture:

Pros:

Improved performance and scalability: Load balancers distribute traffic evenly across multiple servers, reducing the load on each server and ensuring that no server is overwhelmed.

High availability and fault tolerance: Load balancers can automatically redirect traffic to other healthy servers if one server fails, ensuring that services remain available even if some servers go down.

Flexibility and customization: Load balancers can be customized with different algorithms and rules to handle traffic in a way that best suits the specific needs of the system.

Cons:

Complexity and cost: Load balancers can add complexity and cost to the system, as they require additional hardware or software to manage and maintain.

Single point of failure: If the load balancer itself fails, the entire system may become unavailable.

Increased latency: Depending on the setup, load balancers can add some latency to the system by adding an additional hop between clients and servers.
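The "high availability and fault tolerance" point above can be sketched as a round-robin selector that skips servers marked as down. The class `HealthAwareBalancer` and its `markDown` and `markUp` methods are assumptions for this example. In a real load balancer, periodic health checks would typically drive those calls.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Optional;
import java.util.Set;

// Hypothetical selector that rotates over the pool but skips unhealthy servers.
class HealthAwareBalancer {
    private final List<String> servers;
    private final Set<String> unhealthy = new HashSet<>();
    private int cursor = 0;

    HealthAwareBalancer(List<String> servers) {
        this.servers = new ArrayList<>(servers);
    }

    synchronized void markDown(String server) {
        unhealthy.add(server);
    }

    synchronized void markUp(String server) {
        unhealthy.remove(server);
    }

    // Tries each server at most once per call, starting from the cursor.
    // Returns empty when no healthy server is left.
    synchronized Optional<String> next() {
        for (int i = 0; i < servers.size(); i++) {
            String candidate = servers.get(cursor);
            cursor = (cursor + 1) % servers.size();
            if (!unhealthy.contains(candidate)) {
                return Optional.of(candidate);
            }
        }
        return Optional.empty();
    }
}
```

If one of three servers is marked down, traffic alternates between the remaining two, and the failed server starts receiving requests again once it is marked up.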

Difference between Load Balancers and API Gateway in Microservice Architecture

Here are 10 differences between API gateway and load balancer in point-by-point format:

1. Purpose

The primary purpose of an API gateway is to provide a unified API for microservices, while the primary purpose of a load balancer is to distribute traffic evenly across multiple servers.

2. Functionality

An API gateway can perform several functions, such as routing, security, load balancing, and API management, while a load balancer only handles traffic distribution.

3. Routing

An API gateway routes requests based on a predefined set of rules, typically matching on the URL path, HTTP method, or headers, while a load balancer chooses a server using a predefined algorithm, such as round-robin or least connections.

4. Protocol Support

An API gateway typically works at the application layer and supports multiple protocols, such as HTTP, WebSocket, and MQTT. A Layer 4 load balancer works at the transport layer with TCP and UDP, although Layer 7 load balancers also understand HTTP.

5. Security

An API gateway provides features such as authentication, authorization, and SSL/TLS termination, while a load balancer is usually limited to basic security features such as SSL offloading, that is, terminating TLS on behalf of the servers behind it.

6. Caching

An API gateway can cache responses from microservices to improve performance, while a load balancer typically does not offer caching capabilities.

7. Transformation

An API gateway can transform data between different formats, such as JSON to XML, while a load balancer does not provide data transformation capabilities.

8. Service Discovery

An API gateway can integrate with service discovery mechanisms to dynamically discover microservices, while a traditional load balancer relies on a statically configured list of servers.

9. Granularity

An API gateway can provide fine-grained control over API endpoints, while a load balancer only controls traffic at the server level.

10. Scalability

An API gateway can handle a high number of API requests and protect microservices from overload with rate limiting and throttling, while a load balancer supports horizontal scaling by spreading traffic across the instances you add.
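The least-connections algorithm mentioned under Routing can be sketched as follows. The class `LeastConnectionsBalancer` and its `acquire` and `release` methods are hypothetical names chosen for this example. The sketch only tracks connection counts and assumes the caller reports when a connection ends.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical least-connections selector: always picks the server that
// currently has the fewest active connections.
class LeastConnectionsBalancer {
    // server -> number of active connections, in registration order
    private final Map<String, Integer> active = new LinkedHashMap<>();

    LeastConnectionsBalancer(List<String> servers) {
        for (String server : servers) {
            active.put(server, 0);
        }
    }

    // Picks the least-loaded server (first registered wins ties)
    // and counts the new connection against it.
    synchronized String acquire() {
        String best = null;
        for (Map.Entry<String, Integer> entry : active.entrySet()) {
            if (best == null || entry.getValue() < active.get(best)) {
                best = entry.getKey();
            }
        }
        if (best == null) {
            throw new IllegalStateException("no servers configured");
        }
        active.merge(best, 1, Integer::sum);
        return best;
    }

    // Called when a connection to the given server is closed.
    synchronized void release(String server) {
        active.computeIfPresent(server, (name, count) -> Math.max(0, count - 1));
    }
}
```

Unlike round-robin, this choice depends on the current load of each server, so a server that is stuck with long-running requests receives fewer new ones.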

Now that you have a good idea of the differences, you can also watch this video from ByteByteGo to better understand the concept. It covers not only the difference between API Gateway and Load Balancer but also reverse proxy.

That’s all about the difference between API Gateway and Load Balancer in Microservices. As I said, API Gateway provides higher-level features related to API management, such as security, versioning, and a single entry point, while Load Balancer provides lower-level features related to traffic distribution across multiple instances of a microservice.

So, if you need more than just forwarding requests to multiple instances, you should use an API Gateway instead of a Load Balancer, but if your requirement is just to distribute incoming traffic across multiple application instances, a Load Balancer is enough.
